Preamble

EXPLAIN ANALYZEis essential for PostgreSQL SQL statement optimization. Not all of it is attempted to be explained in this article. Instead, I'd like to give you a quick overview, outline what to look for, and demonstrate some helpful tools for visualizing the results.

If an SQL statement executes too slowly, you should understand why and how to resolve the issue. Being a declarative language as opposed to a procedural language, where you describe the result you want to get instead of how to calculate it, makes it more difficult to understand how the SQL engine uses its time. The EXPLAIN command shows you the execution plan that is generated by the optimizer. This is helpful, but typically you need more information.

You can obtain such additional information by adding EXPLAIN options in parentheses.

The most important EXPLAIN options

ANALYZE: with this keyword,EXPLAINdoes not only show the plan and PostgreSQL’s estimates, but it also executes the query (so be careful withUPDATEandDELETE!) and shows the actual execution time and row count for each step. This is indispensable for analyzing SQL performance.BUFFERS: You can only use this keyword together withANALYZE, and it shows how many 8kB-blocks each step reads, writes and dirties. You always want this.VERBOSE: if you specify this option,EXPLAINshows all the output expressions for each step in an execution plan. This is usually just clutter, and you are better off without it, but it can be useful if the executor spends its time in a frequently-executed, expensive function.SETTINGS: this option exists since v12 and includes all performance-relevant parameters that are different from their default value in the output.WAL: introduced in v13, this option shows the WAL usage incurred by data modifying statements. You can only use it together withANALYZE. This is always useful information!FORMAT: this specifies the output format. The default formatTEXTis the best for humans to read, so use that to analyze query performance. The other formats (XML,JSONandYAMLare better for automated processing.

Typically, the best way to call EXPLAIN is:

EXPLAIN (ANALYZE, BUFFERS) /* SQL statement */;

Include SETTINGS if you are on v12 or better and WAL for data modifying statements from v13 on.

It is highly commendable to set track_io_timing = on to get data about the I/O performance.

Caveats and limitations

You cannot use EXPLAIN for all kinds of statements: it only supports SELECT, INSERT, UPDATE, DELETE, EXECUTE (of a prepared statement), CREATE TABLE ... AS and DECLARE (of a cursor).

Note that EXPLAIN ANALYZE adds a noticeable overhead to the query execution time, so don’t worry if the statement takes longer.

The time it takes to execute a query will always vary slightly because data may not be available in cache when the query is first run. That’s why it is valuable to repeat EXPLAIN ANALYZE a couple of times and see if the result changes.

What information do you get from EXPLAIN ANALYZE?

A portion of the data is shown for each node of the execution plan, and a portion is shown in the footer.

EXPLAINwithout choices

Plain EXPLAIN will give you the estimated cost, the estimated number of rows and the estimated size of the average result row. The unit for the estimated query cost is artificial (1 is the cost for reading an 8kB page during a sequential scan). The startup cost (the price to return the first row) and the total cost (the price to return all rows) are the two cost values.

EXPLAIN SELECT count(*) FROM c WHERE pid = 1 AND cid > 200; QUERY PLAN ------------------------------------------------------------ Aggregate (cost=219.50..219.51 rows=1 width=8) -> Seq Scan on c (cost=0.00..195.00 rows=9800 width=0) Filter: ((cid > 200) AND (pid = 1)) (3 rows)

Output from the ANALYZE option

ANALYZEgives you a second parenthesis that includes the milliseconds of actual execution time, the actual number of rows, and a loop count that demonstrates how frequently that node was executed. Furthermore, it displays how many rows were removed by filters.

EXPLAIN (ANALYZE) SELECT count(*) FROM c WHERE pid = 1 AND cid > 200; QUERY PLAN --------------------------------------------------------------------------------------------------------- Aggregate (cost=219.50..219.51 rows=1 width=8) (actual time=4.286..4.287 rows=1 loops=1) -> Seq Scan on c (cost=0.00..195.00 rows=9800 width=0) (actual time=0.063..2.955 rows=9800 loops=1) Filter: ((cid > 200) AND (pid = 1)) Rows Removed by Filter: 200 Planning Time: 0.162 ms Execution Time: 4.340 ms (6 rows)

In the footer, you see how long PostgreSQL took to plan and execute the query. You can suppress that information with SUMMARY OFF.

Output from the BUFFERS option

This option shows how many data blocks were found in the cache (hit) for each node, how many had to be read from disk, how many were written and how many dirtied. In recent versions, the footer contains the same information for the work done by the optimizer, if it didn’t find all its data in the cache.

If track_io_timing = on, you will get timing information for all I/O operations.

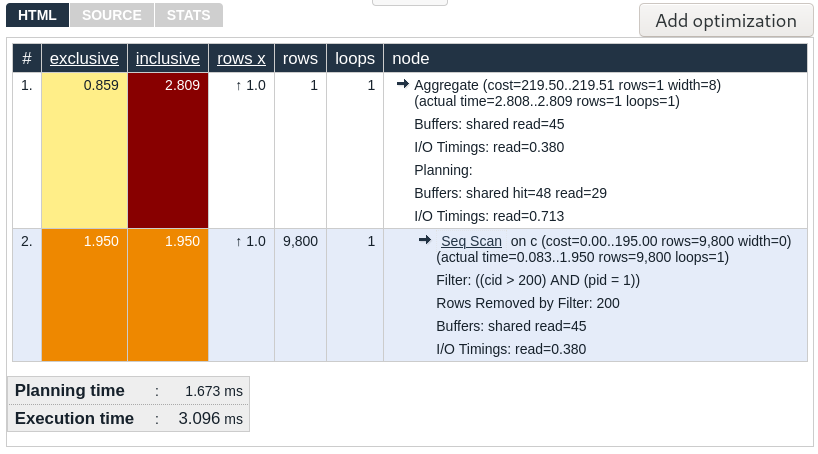

EXPLAIN (ANALYZE, BUFFERS) SELECT count(*) FROM c WHERE pid = 1 AND cid > 200; QUERY PLAN --------------------------------------------------------------------------------------------------------- Aggregate (cost=219.50..219.51 rows=1 width=8) (actual time=2.808..2.809 rows=1 loops=1) Buffers: shared read=45 I/O Timings: read=0.380 -> Seq Scan on c (cost=0.00..195.00 rows=9800 width=0) (actual time=0.083..1.950 rows=9800 loops=1) Filter: ((cid > 200) AND (pid = 1)) Rows Removed by Filter: 200 Buffers: shared read=45 I/O Timings: read=0.380 Planning: Buffers: shared hit=48 read=29 I/O Timings: read=0.713 Planning Time: 1.673 ms Execution Time: 3.096 ms (13 rows)

How to read EXPLAIN ANALYZE output

You can see that even with straightforward queries, you end up with a lot of data. You must be proficient at reading it in order to get useful information.

First, you need to know that a PostgreSQL execution plan is a tree with a number of nodes. Lower nodes are indented and begin with an arrow (->), while the top node (the Aggregate above) is at the top. Nodes on the same level as each other have the same indentation (for example, the two relations combined with a join).

PostgreSQL works from the top down, so it starts by making the first result row for the top node. The executor processes lower nodes “on demand.” This means that it only gets the number of result rows from each lower node that are needed to calculate the next result from the top node. The startup time for the upper node is at least as long as the startup time for the lower nodes, and the same is true for the total duration, which has an impact on how you must interpret the terms “cost” and “time.” You must deduct the time spent in the lower nodes from the net time spent in each node. That becomes considerably more challenging with parallel queries.

Additionally, in order to calculate the overall amount of time spent in a node, you must multiply the cost and time by the quantity of “loops.”

What to focus on in EXPLAIN ANALYZE output

- Take a look at the nodes that were used the most during the execution process.

- Discover the lowest node where the estimated row count differs significantly from the actual row count. This is frequently the source of poor performance, and long execution times elsewhere are only the result of bad plan decisions based on bad estimates.

- Locate lengthy sequential scans that have a filter condition that eliminates a large number of rows; these are good candidates for an index.

Tools to interpret EXPLAIN ANALYZE output

A longer execution plan can be challenging to read, so a few tools have been developed to try to represent this “sea of text”:

Depesz’ EXPLAIN ANALYZE visualizer

You can find this tool at https://explain.depesz.com/. The following output will appear if you paste the execution plan into the text box and click “Submit”:

The execution strategy resembles the original somewhat but is more aesthetically pleasing. There are helpful add-on characteristics:

- The nodes with the highest times are highlighted with a red background and are easy to spot. Total execution time and net execution time are calculated and shown for each node.

- Bad estimates are highlighted with a red background, and you can see what factor PostgreSQL overestimated or underestimated the number of rows under the heading “rows x”.

- Clicking on a node makes everything beneath it invisible, allowing you to ignore uninteresting sections of a lengthy execution plan and concentrate on the crucial ones.

- It is simple to find them in a large execution plan because all of a node’s immediate children are highlighted with a star when you hover over a node.

What I particularly like about this tool is that all the original EXPLAIN text is right there for you to see, once you have focused on an interesting node. The look and feel is decidedly more “old school” and no-nonsense, and this site has been around for a long time.

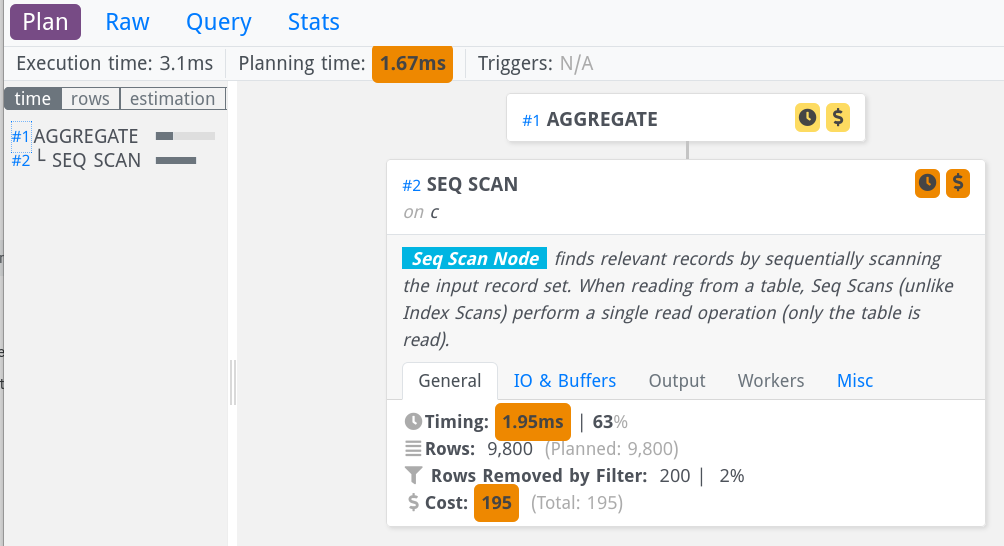

Dalibo’s EXPLAIN ANALYZE visualizer

Visit https://explain.dalibo.com/ to access this tool. You once more paste the unaltered execution plan and click “Submit.” The output is presented as a tree:

The details are initially hidden by the display; however, by clicking on a node, as I have done with the second node in the image above, you can reveal them. You can navigate to the right side to view more information from the left side’s brief overview of all nodes. Features that increase value include:

- You can concentrate on the most expensive nodes by using the bars on the left side of the screen, which show the relative net execution time.

- You can choose “estimation” on the left side of the screen to see how much PostgreSQL over- or under-estimated the number of rows for each row.

- Finally, you can click on “buffers” to see which nodes used the most 8kB blocks; this information is useful because it identifies nodes whose execution time will be affected by the caching quality of the data.

- By clicking on a node on the right side, you can expand it and access all the detailed information in different tabs.

- The “crosshair” icon, which is tucked away in a node’s lower right corner, can be used to collapse everything beneath a node in the tree.

The nice thing about this tool is that it makes the execution plan’s tree structure very visible. The appearance and feel are more modern. The drawback is that the detailed information is somewhat hidden, so you have to know where to look for it.

Conclusion

EXPLAIN (ANALYZE, BUFFERS) (with track_io_timing turned on) will show you everything you need to know to diagnose SQL statement performance problems. To keep from drowning in a sea of text, you can use Depesz’ or Dalibo’s visualizer. Both provide roughly the same features.

About Enteros

Enteros offers a patented database performance management SaaS platform. It finds the root causes of complex database scalability and performance problems that affect business across a growing number of cloud, RDBMS, NoSQL, and machine learning database platforms.

The views expressed on this blog are those of the author and do not necessarily reflect the opinions of Enteros Inc. This blog may contain links to the content of third-party sites. By providing such links, Enteros Inc. does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.

Are you interested in writing for Enteros’ Blog? Please send us a pitch!

RELATED POSTS

Optimizing Enterprise Performance in the Telecom Sector: How Enteros Drives Cloud FinOps Excellence

- 17 April 2025

- Database Performance Management

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Accelerating Performance Growth in the Insurance Sector with Enteros: Uniting Database Optimization and RevOps Efficiency

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Harnessing Enteros and Generative AI to Empower Database Administrators in the Hospitality Sector through a Scalable SaaS Platform

- 16 April 2025

- Database Performance Management

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Driving Cost Attribution and Performance Efficiency in the Travel Sector with AIOps and Cloud-Based Database Platforms

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…