Five easy steps to improve your database performance

Most programs have database access as a standard feature. Simple to improve database performance tweaking appears to be able to considerably speed up at least 80% of all apps we examine.

Fortunately, there isn’t much rocket science involved until you get far into database tuning. When you’re ready to take database optimization to the next level, several excellent options are available, including those from our friends at Vivid Cortex. However, for this article, we’ll solely discuss quick victories that you may acquire without the assistance of other parties.

Step 1. Is your database server healthy?

First and foremost, be sure that the server serving your database process has adequate resources. It includes the processor, memory, and storage space.

CPU

Although the CPU is unlikely to be a bottleneck, database servers constantly pressure machines. Ensure that the host has at least two CPU cores available to keep it responsive. I’m going to assume that some of your hosts virtualize. When monitoring virtual machines, it’s good to keep an eye on the virtual host that the devices are running. Individual virtual machine CPU metrics do not provide a complete picture. Numbers such as CPU ready time are significant since they indicate how much CPU time the virtual machines can use.

The number of CPUs has an impact on licensing and technical concerns. You should ensure that your database server license nearly matches the number of CPUs. Otherwise, you risk overspending or not getting the most out of your hardware. When allocating CPU time to virtual machines, CPU ready time is a significant component.

Memory

Keep in mind that memory consumption isn’t the sole measure to monitor. Memory consumption does not indicate how much more memory is required. The figure to pay attention to is page faults per second. Regarding your host’s memory requirements, page faults are the accurate indicator. Thousands of page errors per second means your hosts are out of memory (you’ll hear your server’s hard disk grinding away at this point).

Space on the hard drive

Databases take up a LOT more disk space than the data itself, thanks to indices and other efficiency enhancements (indices, you know). NoSQL databases (such as Cassandra and MongoDB) need more disk space than you might think. While MongoDB uses less RAM than a traditional SQL database, it consumes a lot of disk space. I can’t stress that it is highly critical to ensure your database server has enough storage. Also, make sure your database is running on dedicated hard drives to avoid disk fragmentation caused by other activities.

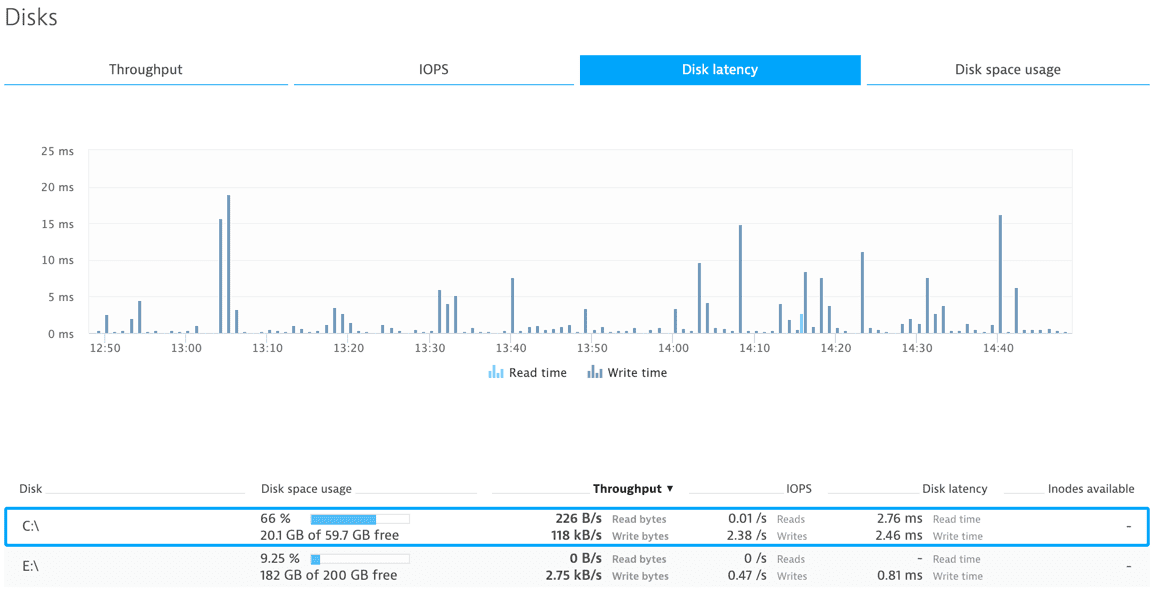

Disk delay is a sign of an overburdened hard drive. One number to keep an eye on is disk latency. As the load on the hard drive grows, disk latency will increase, resulting in a decline in database performance. What can you do in this situation? First, take advantage of your application’s and database’s caching methods to the most extent possible. There isn’t a more efficient or cost-effective technique to move the needle.

If that still doesn’t get you the performance you want, you may permanently install more hard drives. Simply mirroring your hard drives can increase read performance. The written version improves by using RAID 1 or RAID 10 instead of RAID 6. If you need to get your hands dirty with this topic, read up on disk latency and I/O issues.

If that doesn’t work, consider Solid State Drives. Make sure you use an optimized model for database use, as databases use more read/write cycles than most other applications. Despite falling prices, they are still more expensive than ordinary hard disks. However, if you require a performance bump, they are well worth the money.

Step 2. Who is accessing the performance of the database?

Following the installation of your database on healthy hardware, you should examine which applications are accessing the database. If one of your apps or services has poor database performance, don’t assume you know which application or service is to blame. Finding database performance bottlenecks requires knowing which services access a database.

There are two distinct issues to consider regarding poor database performance. On the one hand, the entire database could be affected. On the other hand, there could be a single client who isn’t performing well. Check if your server is healthy and if all of the database’s customers are experiencing poor performance. Likely, your hardware isn’t up to the task. If only one service is experiencing slow database response times, delve deeper into that service’s analytics to figure out what’s causing the issue.

Understand the load and individual response time of each service instance

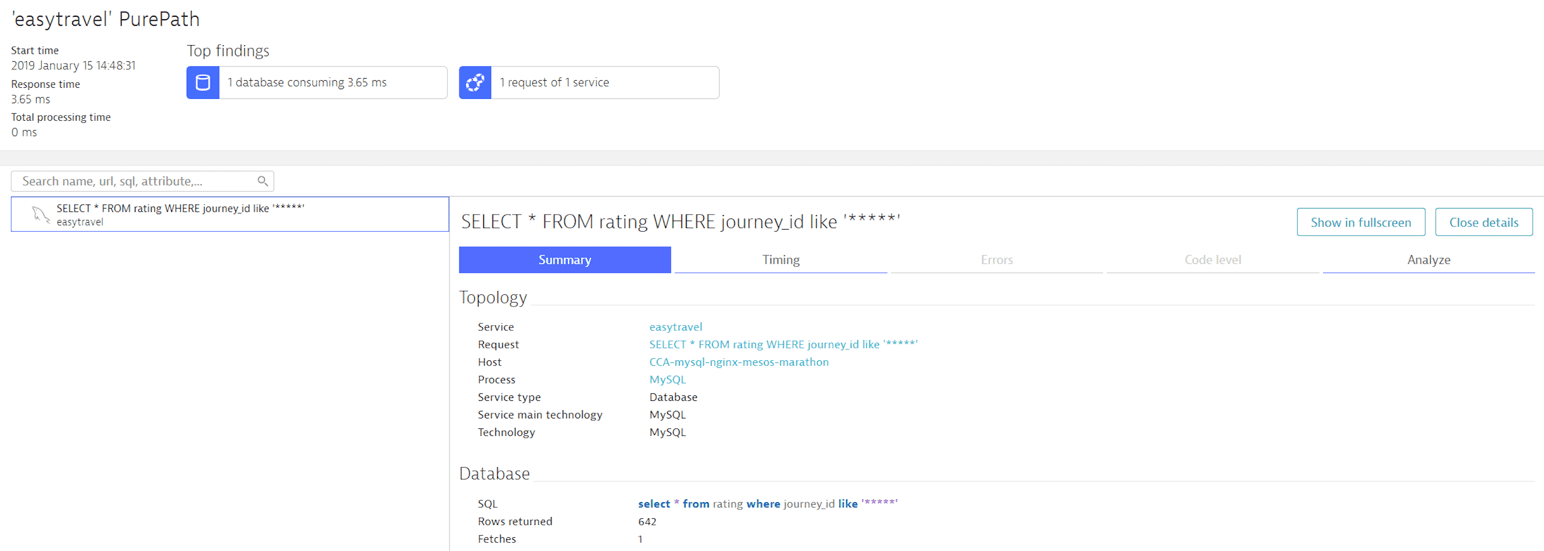

If a particular service is having issues with the database, you should investigate the service’s communication with the database. Which queries are carried out? How frequently are the questions run per request? What is the number of rows they return?

You should know which commands have the most impact on the performance of the database. Also, if you’re running multiple instances of a single service (which you should), see if all of them or just a few are affected. If services install redundantly, it may detect different database performances between instances. It’s crucial to remember that database-related problems could have their origins elsewhere. There is frequently an issue with how a database accesses.

Examine the frequency with which each request’s queries call. By increasing your service’s database cache, you might be able to reduce the number of actual database requests. Should question everything. Is there any reason you should run a query several times for each request? If there is, efficient caching tactics may be able to help you unlock some potential performance.

Do you have enough database connections?

Even if your database querying method is flawless, you may still encounter poor database performance. If this is the case, it’s time to double-check that the database connection in your application is sized correctly.

How many round trips are required between your service and the database?

There are two things to think about when setting a connection pool:

1) How many connections can the database manage at one time?

2) How big should your connection pool be for your application?

Why don’t you make the connection pool the most significant possible? Because your application could not be the only one accessing the database. The database server will not be able to function as expected if your application consumes all of the connections. On the other hand, if your application is the only one linked to the database, go for it!

And what’s the ideal route for figuring out how many contacts I can make?

Step #1 confirmed that your database server is in good working order. The database’s resources determine the maximum number of database connections. As a result, progressively raise the load and the number of allowed links to your database to find the maximum number of connections. Keep an eye on the metrics of your database server while you’re doing this. You’ve reached the limit when they max out, whether CPU, RAM, or disk performance. If the amount of connections you have access to is insufficient for your application, it’s time to upgrade your hardware.

Determine the optimal connection pool size for your application.

The amount of parallel demand your application applies to the database server equals the number of allowed concurrent connections to your database. Here are a few tools to help you calculate the exact cost. Even if your database server is in good shape, more load will result in longer transaction response times. Measure the transaction response time from beginning to end to discover if Connection Acquisition takes up more time as the load increases. If it does, then your connection pool has been depleted. If it doesn’t, check the analytics on your database server to see the maximum number of connections your database can handle. According to a decent rule of thumb, the size of a connection pool should be stable, not variable. As a result, make the minimum and maximum pool sizes the same.

Don’t forget about the network.

We often overlook the physical limits that our virtualized infrastructure faces. Despite this, there remain physical limitations: cables fail, and routers fail. The distinction between what works and what doesn’t changes constantly. It is why it’s essential to monitor your network measurements. Suppose difficulties occur after months or even years of flawless operation. In that case, your infrastructure is likely suffering from a non-virtual, physical issue. Examine your routers, cables, and network interfaces for any problems. It’s better to do this as soon as you notice the first signs of a problem because this may be the only time you have to repair a problem before it affects your business.

Retransmissions have a significant impact on network performance. Overworked processes frequently start dropping packets owing to a lack of resources. If a hardware failure doesn’t cause your network problem, process-level visibility can help you locate a failed component.

Database performance wrap-up.

Databases are complex applications that don’t mean to fail or perform poorly. To work at their optimum, ensure your databases are hosted and resourced securely.

To improve your database, you’ll need the following:

- Data from the server to determine the health of the host

- Metrics on the hypervisor and virtual machines to confirm that your virtualization is working correctly.

- Application data uses to speed up database access.

- Data from the network to examine the influence of database communication on the web.

About Enteros

Enteros offers a patented database performance management SaaS platform. It proactively identifies root causes of complex business-impacting database scalability and performance issues across a growing number of RDBMS, NoSQL, and machine learning database platforms.

The views expressed on this blog are those of the author and do not necessarily reflect the opinions of Enteros Inc. This blog may contain links to the content of third-party sites. By providing such links, Enteros Inc. does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.

Are you interested in writing for Enteros’ Blog? Please send us a pitch!

RELATED POSTS

Optimizing Supply Chain Efficiency with Enteros: Leveraging Software Solutions, Generative AI, and Cloud FinOps for Cost-Effective Performance

- 18 March 2025

- Database Performance Management

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Optimizing Insurance Sector Databases with Enteros: An AI-Driven AIOps SaaS Solution

- 17 March 2025

- Database Performance Management

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Enhancing Database Performance in the Technology Sector with Enteros Logical Models

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…

Optimizing Marine Industry Database Performance and Cloud Resources with Enteros Cloud FinOps Solutions

- 16 March 2025

- Database Performance Management

In the fast-evolving world of finance, where banking and insurance sectors rely on massive data streams for real-time decisions, efficient anomaly man…